Causal Inference and Policy Learning

Learning interpretable rules while accounting for biases from observational data

From data to decisions

The key difficulty in prescription problems is the lack of counterfactuals: we only observe the outcome under the treatment that was actually assigned in the data. To make informed decisions, we need to accurately estimate the outcomes under the other treatment options as well.

Our Reward Estimation and Optimal Policy Trees modules address this issue from a rigorous causal inference perspective, delivering unbiased and understandable decision rules directly from observational data.

| X1 | X2 | T | Y (A) | Y (B) | Y (C) |
|---|---|---|---|---|---|
| x11 | x12 | A | y1A | ? | ? |
| x21 | x22 | A | y2A | ? | ? |
| x31 | x32 | B | ? | y3B | ? |
| x41 | x42 | C | ? | ? | y4C |

The problem of counterfactual estimation: given features X and treatment T, we observe only the outcome Y under the assigned treatment and must estimate the outcomes under the other treatments (marked ?).
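The structure of the table above can be made concrete with a minimal Python sketch. The function name `outcome_matrix` and the numeric outcomes are hypothetical, chosen only to mirror the four rows of the table: each row holds exactly one observed value, and every `None` is a counterfactual that a reward estimator has to fill in.

```python
from typing import Optional


def outcome_matrix(treatments: list[str], outcomes: list[float],
                   arms: list[str]) -> list[list[Optional[float]]]:
    """Place each observed outcome in the column of its assigned treatment.

    All other entries are None: the unobserved counterfactual outcomes.
    """
    col = {arm: j for j, arm in enumerate(arms)}
    matrix = []
    for t, y in zip(treatments, outcomes):
        row: list[Optional[float]] = [None] * len(arms)
        row[col[t]] = y  # the only outcome we actually see for this unit
        matrix.append(row)
    return matrix


# Hypothetical outcome values for the four rows of the table.
arms = ["A", "B", "C"]
M = outcome_matrix(["A", "A", "B", "C"], [1.2, 0.8, 2.5, 1.9], arms)
for row in M:
    print(row)
```

With three treatments, two out of every three entries are missing, which is why the missing outcomes must be estimated rather than read off the data.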

Estimating counterfactuals from observational data

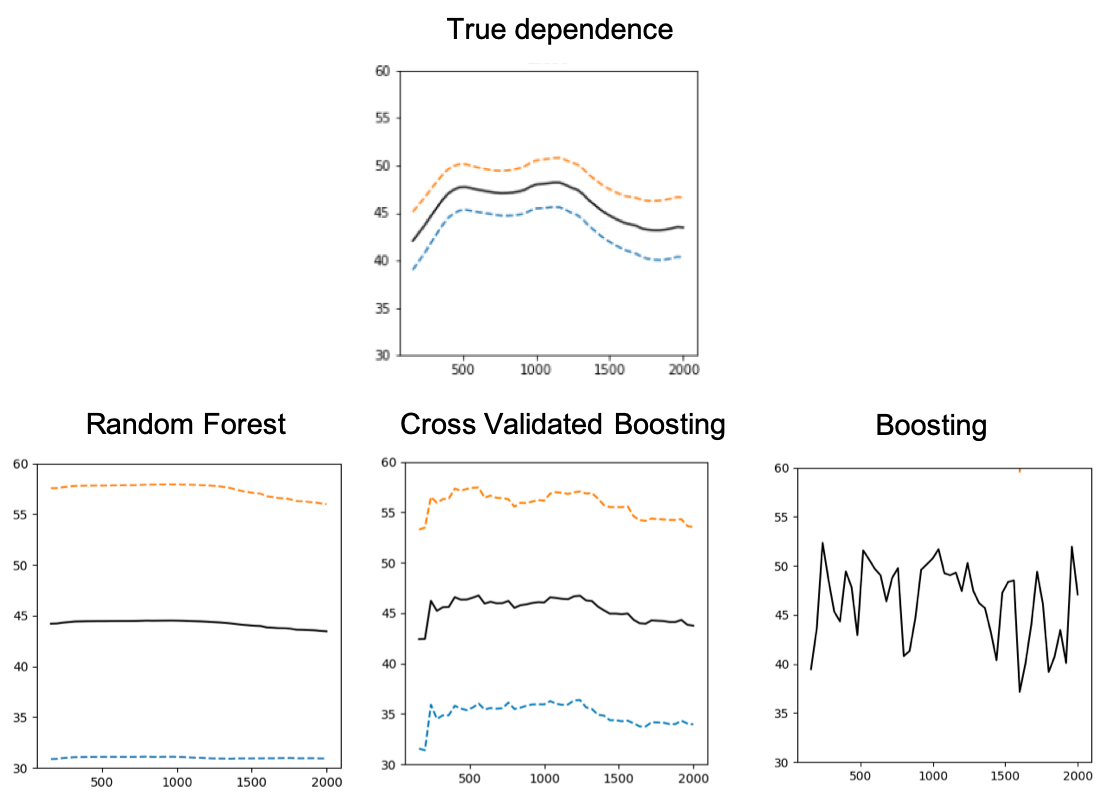

A naive and commonly used approach is to train a machine learning model to predict the outcome directly from the features and the treatment. However, the estimated treatment effects can vary drastically depending on the choice of model class and parameters, and there is no way to tell which of the estimated relationships is correct.
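This sensitivity to the model class can be reproduced in a toy regress-and-compare sketch. It assumes a single feature, two treatments (0 and 1), and hand-built synthetic data in which treatment is more common at high values of the feature and the true effect is +1. The two model classes (a per-arm constant and a per-arm linear fit) are simple stand-ins for the random forest and boosting models mentioned in this section; all function names are hypothetical.

```python
def fit_constant(xs, ys):
    """Model class 1: ignore the feature and predict the arm's mean outcome."""
    mean = sum(ys) / len(ys)
    return lambda x: mean


def fit_linear(xs, ys):
    """Model class 2: ordinary least squares on a single feature."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def regress_and_compare(X, T, Y, fit):
    """Fit one outcome model per treatment arm, predict both arms for every
    row, and return the average estimated effect of treatment 1 versus 0."""
    models = {}
    for arm in (0, 1):
        xs = [x for x, t in zip(X, T) if t == arm]
        ys = [y for y, t in zip(Y, T) if t == arm]
        models[arm] = fit(xs, ys)
    effects = [models[1](x) - models[0](x) for x in X]
    return sum(effects) / len(effects)


# Synthetic data: treatment is more common at high x; the true effect is +1.
X = [0, 1, 2, 3, 4, 5, 6, 7]
T = [0, 0, 0, 1, 0, 1, 1, 1]
Y = [2 * x + t for x, t in zip(X, T)]

print(regress_and_compare(X, T, Y, fit_constant))  # 8.0
print(regress_and_compare(X, T, Y, fit_linear))    # 1.0
```

On identical data the two model classes estimate effects of 8.0 and 1.0. Here the linear model happens to match how the data were generated, but with real observational data that cannot be checked directly.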

Causal inference is a rich field, with more than 30 years of literature devoted to capturing the true causal relationship between the treatment applied and the outcome. We leverage its most recent developments to properly adjust for treatment assignment bias and model misspecification errors.

Based on data from a recent study, we show that models with similar predictive performance can still estimate treatment-response curves that are far from the truth, depending on the model class (random forest or boosting) and the parameter tuning procedure.
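This section does not name the specific estimators used for the adjustment. One widely used technique that addresses both treatment assignment bias and model misspecification is the augmented inverse propensity weighted (AIPW), or doubly robust, estimator: for unit i and treatment a, the reward is the outcome-model prediction plus an inverse-propensity-weighted correction applied only when a was the assigned treatment. The sketch below is an illustration under stated assumptions, not the module's implementation: the function name, the hand-built sample, and the known propensities are hypothetical, and the outcome model is deliberately misspecified.

```python
def aipw_rewards(y, t, mu, e, arms):
    """Doubly robust (AIPW) reward estimate for every unit and treatment.

    y[i]: observed outcome; t[i]: assigned treatment
    mu[i][a]: outcome-model prediction for unit i under treatment a
    e[i][a]: estimated probability that unit i receives treatment a
    Returns gamma[i][a] = mu[i][a] + 1{t[i] == a} * (y[i] - mu[i][a]) / e[i][a]
    """
    gamma = []
    for yi, ti, mui, ei in zip(y, t, mu, e):
        row = {}
        for a in arms:
            correction = (yi - mui[a]) / ei[a] if ti == a else 0.0
            row[a] = mui[a] + correction
        gamma.append(row)
    return gamma


# Hand-built sample: one binary feature x, known propensities, true effect +2.
x = [0, 0, 0, 0, 1, 1, 1, 1]
t = [1, 0, 0, 0, 1, 1, 1, 0]
y = [10 * xi + 2 * ti for xi, ti in zip(x, t)]

# Propensity model (assumed correct): P(T=1 | x=0) = 0.25, P(T=1 | x=1) = 0.75.
e = [{1: p, 0: 1 - p} for p in (0.25 if xi == 0 else 0.75 for xi in x)]

# Deliberately misspecified outcome model: arm means that ignore x.
mean1 = sum(yi for yi, ti in zip(y, t) if ti == 1) / t.count(1)
mean0 = sum(yi for yi, ti in zip(y, t) if ti == 0) / t.count(0)
mu = [{1: mean1, 0: mean0} for _ in x]

gamma = aipw_rewards(y, t, mu, e, arms=(0, 1))
naive = mean1 - mean0
aipw_effect = sum(g[1] - g[0] for g in gamma) / len(gamma)
print(naive)                  # 7.0 (confounded by x)
print(round(aipw_effect, 6))  # 2.0 (matches the true effect)
```

In this sample the average AIPW reward difference recovers the true effect exactly, because the observed treatment shares in each stratum match the propensities. In general the estimator is consistent if either the outcome model or the propensity model is correct, which is what "doubly robust" refers to.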

Optimal Policy Trees learn understandable treatment rules

Compared to alternatives such as Regress-and-Compare or Causal Forests, Optimal Policy Trees deliver an interpretable decision rule that is less prone to overfitting and can incorporate more advanced causal inference techniques. In a recent paper, we show that these optimal trees outperform their greedy counterparts and perform on par with black-box methods.

Example Policy Tree for marketing in investment management, which assigns the best interaction to maximize fund inflow
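To make the objective of a policy tree concrete, here is a toy sketch in the spirit of the marketing example. It finds the best depth-1 policy tree by exhaustive search: it tries every threshold on every feature and assigns each leaf the treatment with the highest total estimated reward (for instance, rewards produced by a doubly robust estimator). This is only an illustration of the objective, not the algorithm the Optimal Policy Trees software uses; the features, treatments, reward values, and function names are all hypothetical. At depth 1, exhaustive and greedy search coincide; the difference appears for deeper trees, where exhaustive enumeration like this quickly becomes expensive.

```python
def best_arm(rows, rewards, arms):
    """Treatment with the highest total estimated reward over the given rows."""
    totals = {a: sum(rewards[i][a] for i in rows) for a in arms}
    arm = max(arms, key=lambda a: totals[a])
    return arm, totals[arm]


def fit_depth1_policy_tree(X, rewards, arms):
    """Search every (feature, threshold) split and keep the one whose two
    leaves, each given its best treatment, yield the largest total reward.

    Returns (total_reward, feature, threshold, left_arm, right_arm);
    feature and threshold are None if a single leaf is best.
    """
    all_rows = range(len(X))
    arm, value = best_arm(all_rows, rewards, arms)
    best = (value, None, None, arm, arm)  # baseline: one treatment for everyone
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            left = [i for i in all_rows if X[i][j] <= thr]
            right = [i for i in all_rows if X[i][j] > thr]
            if not left or not right:
                continue  # not a real split
            l_arm, l_val = best_arm(left, rewards, arms)
            r_arm, r_val = best_arm(right, rewards, arms)
            if l_val + r_val > best[0]:
                best = (l_val + r_val, j, thr, l_arm, r_arm)
    return best


# Hypothetical client features: [age, existing_client (0/1)].
X = [[25, 0], [30, 1], [45, 0], [60, 1], [65, 0], [70, 1]]
# Hypothetical per-client reward estimates for two interactions.
rewards = [
    {"email": 3, "call": 1},
    {"email": 2, "call": 1},
    {"email": 2, "call": 1},
    {"email": 1, "call": 3},
    {"email": 0, "call": 2},
    {"email": 1, "call": 4},
]
tree = fit_depth1_policy_tree(X, rewards, arms=("email", "call"))
print(tree)  # (16, 0, 45, 'email', 'call'): age <= 45 -> email, else call
```

The resulting rule is readable at a glance, which is the interpretability argument made above; a single treatment for everyone would score only 12 on these rewards.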

Flexible, practical and easy to use

The software provides a simple API that automatically and carefully handles the nuances of the reward estimation process, allowing practitioners to focus on the machine learning task at hand rather than on implementation details.

Optimal Policy Tree prescribing diabetes treatments, considering multiple continuous-dose treatments such as insulin, metformin, and other oral drugs

Selected use cases of Optimal Policy Trees

Optimal Pricing at Grocery Stores

Retail

Revenue maximization via optimal pricing policies based on purchase history

Pricing of Exchange-Traded Assets

Finance & Insurance

Predicting the fair market price of a financial instrument

Personalized Diabetes Management

Healthcare

Recommending personalized treatments for diabetes patients

Marketing Recommendations that Maximize Fund Flow

Finance & Insurance

Personalized marketing strategies learned from historical interactions and outcomes in investment management